Review of Polynomial Learning Time for Concept-Drift Data in Streaming Environment Based on Discard-After-learn Concept

Keywords:

discard-after-learn, streaming data classification, concept drift, non-stationary environments, hyper-ellipsoid function

Abstract

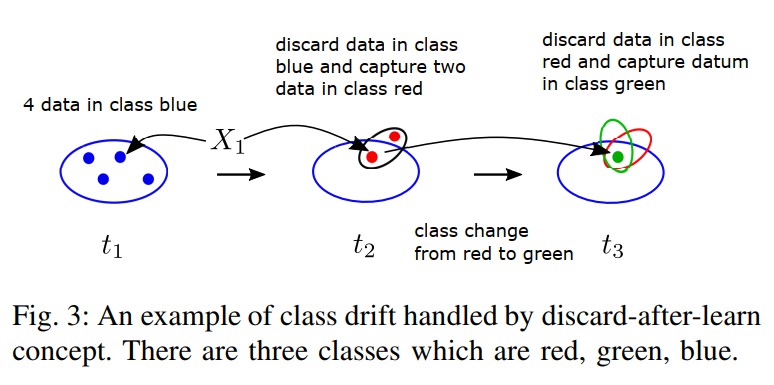

Concept drift, or class drift, is the situation in which whole chunks of learning data occasionally change their classes at different times, based on the known conditional probability between the data and their classes. Applications affected by class drift include cybersecurity, surgery prediction, and weather prediction. Class drift usually occurs in a data streaming environment. Learning, classifying, and identifying the classes of class-drifted data in this environment are challenging problems in machine intelligence. Several methods have been proposed to detect the occurrence of drift, rather than to learn class-drifted data and query their classes. Furthermore, those studies pay little attention to computational time complexity or to the memory overflow caused by the streaming scenario. This paper reviews a series of learning algorithms with polynomial space and time complexity that handle class-drifted data in a streaming environment based on the concept of discard-after-learn. A new neural network structure and the theorems of recurrence functions for computing the center, eigenvectors, and eigenvalues of the structure are summarized.
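
To make the discard-after-learn idea concrete, the following is a minimal sketch, not the reviewed paper's actual theorems. It assumes the hyper-ellipsoid of a class is described by a center (the running mean) and a covariance matrix, and it uses the standard textbook recurrence for updating both from one incoming datum, so that the datum can be thrown away immediately after it is learned. The class name `EllipsoidStats` and its `learn` method are hypothetical names introduced only for this illustration. The eigenvectors and eigenvalues mentioned in the abstract would then come from an eigendecomposition of the covariance matrix, which is not shown here.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


class EllipsoidStats:
    """Running center and population covariance of one class.

    Hypothetical helper for illustration only: it stores no past data,
    just the count, the center, and the covariance matrix.
    """

    def __init__(self, dim: int) -> None:
        self.n = 0
        self.center: Vector = [0.0] * dim
        self.cov: Matrix = [[0.0] * dim for _ in range(dim)]

    def learn(self, x: Vector) -> None:
        """Absorb one datum; the caller may discard x afterwards."""
        n_old = self.n
        n_new = n_old + 1
        # Offset of the new datum from the OLD center.
        delta = [xi - ci for xi, ci in zip(x, self.center)]
        # Center recurrence: m_new = m_old + delta / n_new
        self.center = [ci + di / n_new for ci, di in zip(self.center, delta)]
        # Covariance recurrence (population form):
        # C_new = (n_old / n_new) * C_old
        #         + (n_old / n_new**2) * delta * delta^T
        scale_old = n_old / n_new
        scale_delta = n_old / (n_new * n_new)
        dim = len(x)
        self.cov = [
            [
                scale_old * self.cov[i][j] + scale_delta * delta[i] * delta[j]
                for j in range(dim)
            ]
            for i in range(dim)
        ]
        self.n = n_new


# Simulated stream: each datum is learned once and never stored.
stream = [[1.0, 2.0], [3.0, 4.0], [5.0, 0.0], [2.0, 2.0]]
model = EllipsoidStats(dim=2)
for datum in stream:
    model.learn(datum)

print("count:", model.n)
print("center:", model.center)
print("covariance:", model.cov)
```

Each call to `learn` costs time quadratic in the dimension and the stored state never grows with the number of data seen, which is the sense in which a scheme of this kind keeps both time and space polynomial in a streaming setting.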

Copyright and Disclaimer

Articles published in this journal are the copyright of Chulabhorn Royal Academy.

The opinions expressed in each article are those of the individual authors and do not necessarily reflect the views of Chulabhorn Royal Academy or any other faculty members of the Academy. The authors are fully responsible for all content in their respective articles. In the event of any errors or inaccuracies, the responsibility lies solely with the individual authors.